This edition at a glance:

The highlight - 🤖🇪🇺 the EU AI Act is now approved and awaiting publication

The Council of the European Union approved the AI Act on 21 May 2024, setting harmonized rules for AI systems across the EU. The Act targets providers and deployers of AI systems, including those from outside the EU whose systems are used within the EU. It includes exemptions for AI systems used exclusively for military, defense, and research purposes.

Scope

The AI Act applies to:

- AI system providers and deployers in the EU.

- Third-country providers and deployers if their AI output is used in the EU.

- Importers, distributors, and manufacturers of AI systems.

- Authorized representatives of non-EU providers.

Classification of AI Systems

The Act prohibits AI practices such as cognitive behavioural manipulation, social scoring, predictive policing based on profiling, and biometric categorization by sensitive attributes. It classifies AI systems into risk categories, with high-risk systems requiring strict compliance and a fundamental rights impact assessment for certain public services. General-purpose AI (GPAI) systems face limited requirements unless posing systemic risks.

Enforcement and Penalties

The AI Act establishes an AI Office within the Commission, a scientific panel, an AI Board, and an advisory forum to enforce and advise on the AI Act’s application. Non-compliance can result in fines of up to €35 million or 7% of the company’s global annual turnover, whichever is higher.
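As a rough illustration of how the fine ceiling quoted above works out, here is a minimal Python sketch. It assumes the ceiling is the higher of the two figures, which is how the Act's penalty provisions read for the most serious infringements; lower tiers and the special treatment of SMEs are not modelled. The function name and the sample turnovers are hypothetical.

```python
FIXED_CAP_EUR = 35_000_000  # fixed ceiling quoted above
TURNOVER_PERCENT = 7        # share of global annual turnover quoted above


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Upper bound of the fine, assuming the higher of the two ceilings applies."""
    # Integer arithmetic keeps the euro amounts exact.
    turnover_based_cap = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    # Hypothetical turnovers: EUR 100 million and EUR 1 billion.
    for turnover in (100_000_000, 1_000_000_000):
        print(f"turnover EUR {turnover:,} -> ceiling EUR {max_fine_eur(turnover):,}")
```

Under this assumption, the fixed €35 million figure is the binding ceiling for companies with a turnover below €500 million, and the 7% figure takes over above that.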

Next Steps

The AI Act will be published in the EU’s Official Journal shortly. It will enter into force 20 days after publication and apply in full two years later, except for some specific provisions.
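For planning purposes, the two milestones can be estimated from a publication date with a few lines of Python. This is only a sketch: the publication date used below is a hypothetical placeholder, the function name is invented for illustration, and the legally binding dates are the ones fixed in the published text, which may differ by a day from this simple calculation.

```python
from datetime import date, timedelta


def estimate_milestones(publication: date) -> dict[str, date]:
    """Estimate entry into force (publication + 20 days) and full application (+ 2 years)."""
    entry_into_force = publication + timedelta(days=20)
    try:
        full_application = entry_into_force.replace(year=entry_into_force.year + 2)
    except ValueError:
        # 29 February has no counterpart two years later; fall back to 28 February.
        full_application = entry_into_force.replace(year=entry_into_force.year + 2, day=28)
    return {
        "entry_into_force": entry_into_force,
        "full_application": full_application,
    }


if __name__ == "__main__":
    # Hypothetical publication date, for illustration only.
    for name, day in estimate_milestones(date(2024, 7, 1)).items():
        print(name, day.isoformat())
```

Provisions with their own application dates (the "specific provisions" mentioned above) would need separate entries.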

Being a regulation, the AI Act will apply directly in all EU member states, without the need for national transposition. Still, national implementing laws are to be expected in order to give effect to procedural aspects, align the Act with other national laws, and implement specific provisions of the Act that refer to national law.

You can read the press release here and the AI Act here.

Must reads related to the AI Act

Here is the list you did not know you needed:

- FPF’s Implementation and compliance timeline, here.

- IAPP’s EU AI Act Compliance Matrix, here.

- An overview of the exceptions for AI systems released under free and open-source licenses, here.

- An analysis on biometrics in AI, here.

- Gianclaudio Malgieri’s blog post on Human Vulnerability in the EU Artificial Intelligence Act, here.

- The Euractiv op-ed arguing that the implementation of the AI Act requires robust leadership and an innovative structure with 5 specialised units (Trust and Safety, Innovation Excellence, International Cooperation, Research & Foresight, and Technical Support), here.

- The actual structure of the AI Office was published a few days later, see details here. It will consist of 5 units and 2 advisors:

  - The “Excellence in AI and Robotics” unit;

  - The “Regulation and Compliance” unit;

  - The “AI Safety” unit;

  - The “AI Innovation and Policy Coordination” unit;

  - The “AI for Societal Good” unit;

  - The Lead Scientific Advisor;

  - The Advisor for International Affairs.

🤖 🇺🇸 Colorado AI Consumer Protection Bill signed into Law

On 20 May 2024, the Colorado legislature announced the signing of Senate Bill 24-205 by the Governor. Effective 1 February 2026, the Act mandates significant responsibilities for developers and deployers of ‘high-risk AI systems’ to prevent algorithmic discrimination and ensure transparency and accountability.

Scope

Developers and deployers of ‘high-risk AI systems’ must take reasonable care to prevent algorithmic discrimination. Developers are required to maintain extensive documentation for general-purpose models, including compliance with copyright laws and summaries of training content. Documentation must also include:

- High-level summaries of training data types.

- Known or foreseeable limitations of the AI system.

- The system’s purpose, benefits, and intended uses.

- A detailed evaluation of the system’s performance and mitigation measures before deployment.

Deployers must be informed about data governance measures, intended outputs, and mitigation steps for algorithmic discrimination risks. They must also understand how to use, monitor, and evaluate the AI system during consequential decision-making.

Enforcement

The Attorney General (AG) has exclusive enforcement authority and may implement additional rules. These rules cover documentation, notices, risk management policies, impact assessments, and proof of non-discrimination. Developers must notify deployers and the public about high-risk systems and report any known risks of algorithmic discrimination within 90 days of discovery.

Impact Assessments and Risk Management

The Act requires developers to provide information necessary for impact assessments, including:

- The purpose, context, and benefits of the AI system.

- Risks of algorithmic discrimination and mitigation steps.

- Categories of processed data and outputs.

- Performance metrics and limitations.

- Transparency measures and post-deployment monitoring.

Deployers must implement a risk management program, conduct annual impact assessments, and notify consumers if a consequential decision is made by an AI system. They must also make public statements about high-risk systems and report algorithmic discrimination to the AG within 90 days.

The Colorado AI Act sets a precedent in the U.S. for comprehensive state-level regulation of AI, focusing on preventing discrimination and promoting transparency.

The full text of the Act and its legislative history can be accessed here.

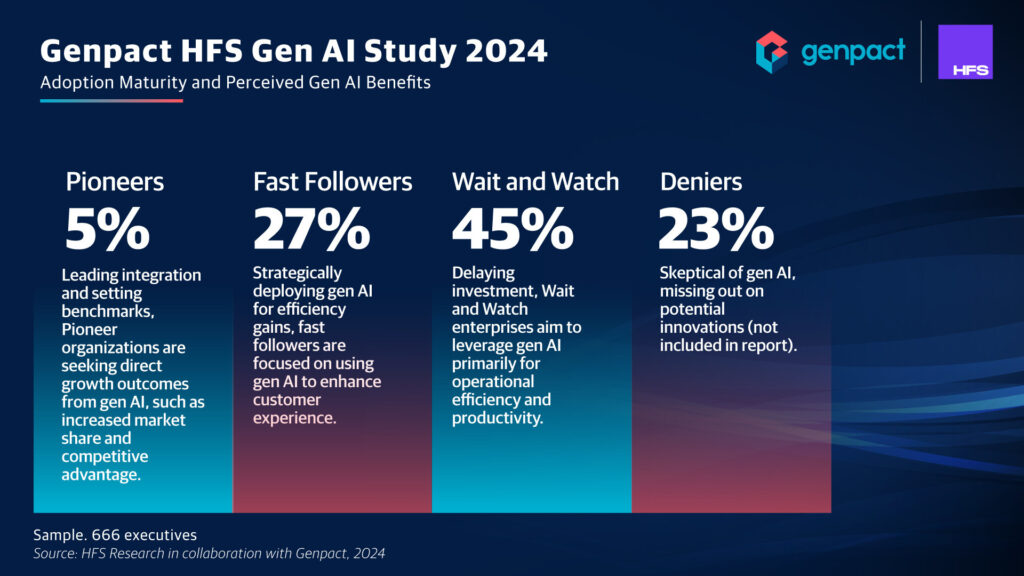

📈 Genpact Report divides enterprises into four generative AI maturity levels

Genpact and HFS Research have published a report, “The Two-year Gen AI Countdown,” emphasizing the urgent need for enterprises to leverage generative AI (gen AI) within the next two years to avoid competitive setbacks. Based on a survey of 550 senior executives from companies with revenues of $1 billion or more across 12 countries and 8 industries, the report highlights the early stages of gen AI adoption and substantial investment efforts.

The study shows that only 5% of enterprises have achieved mature gen AI initiatives, but 61% allocate up to 10% of their tech budgets to accelerate gen AI adoption. Health care, retail, and high-tech sectors are the most proactive, redirecting funds towards gen AI. Conversely, banking, capital markets, and insurance sectors prefer dedicated budgets, while life sciences employ a dual strategy of using anticipated savings and external funding.

The report categorizes enterprises into four gen AI maturity levels:

- Pioneers (5%): Leading in integration and seeking direct growth outcomes like market share increase and competitive advantage.

- Fast Followers (27%): Deploying gen AI strategically for efficiency and customer experience enhancement.

- Wait and Watch (45%): Delaying investment, focusing on operational efficiency and productivity.

- Deniers (23%): Skeptical and missing out on potential innovations.

Click here for the full report.

🛡️ Leading AI Companies Sign Safety Pledge to Mitigate Risks

On 21 May 2024, 16 leading AI companies signed a new set of voluntary AI safety commitments, as announced by the UK and South Korean governments. The signatories include major tech firms such as Amazon, Google, Meta, Microsoft, OpenAI, xAI, and Zhipu AI. The companies pledged to halt the development or deployment of AI models if severe risks cannot be adequately addressed, marking a significant step in global AI governance.

Companies will publish frameworks to assess risks and set thresholds for intolerable risks. They will also detail how mitigations will be implemented and ensure transparency, although some details might remain confidential to protect sensitive information.

The enforcement of these new commitments remains uncertain. The pledge specifies that the 16 companies will provide public transparency on their implementations, barring cases where it might increase risks or reveal sensitive information.

Read more here.

🤖 EU And 27 Other Countries Commit to Address Severe AI Risks

On 22 May 2024, the AI Seoul Summit concluded with 27 nations (Australia, Canada, Chile, France, Germany, India, Indonesia, Israel, Italy, Japan, Kenya, Mexico, the Netherlands, Nigeria, New Zealand, the Philippines, the Republic of Korea, Rwanda, the Kingdom of Saudi Arabia, the Republic of Singapore, Spain, Switzerland, Türkiye, Ukraine, the United Arab Emirates, the United Kingdom, the United States of America) plus the European Union committing to address severe AI risks through collaborative efforts.

The agreement focuses on establishing shared risk thresholds for frontier AI development, particularly in contexts where AI could assist in the development of biological and chemical weapons or evade human oversight. This landmark decision marks the first step towards creating global standards for AI safety.

The countries will work together to create AI safety testing and evaluation guidelines, involving AI companies, civil society, and academia in the process. These proposals will be discussed at the AI Action Summit in France.

Read more here.

📊 Interim Report on the Safety of Advanced AI: Insights from Global Experts

The interim International Scientific Report on the Safety of Advanced AI, published in May 2024, is a comprehensive analysis of the safety of advanced AI, produced by 75 experts from 30 countries, the EU, and the UN. This report, intended to inform discussions at the AI Seoul Summit 2024, focuses on general-purpose AI and its implications for society.

Potential Benefits and Risks: General-purpose AI, if governed well, can advance public interest, improving wellbeing, prosperity, and scientific progress. However, it also poses significant risks, such as biased decision-making, privacy breaches, and misuse in creating fake media or scams. Experts emphasize that the societal impact of AI is subject to the effectiveness of governance and the pace of technological advancements.

Key Findings:

- Uncertainty in Progress: There is no consensus on the rate of future AI advancements. Some experts predict a slowdown, while others foresee rapid progress. This uncertainty makes predicting AI’s future trajectory challenging.

- Technical Limitations: Current methods for assessing and mitigating AI risks have significant limitations. For instance, techniques for explaining AI outputs are still rudimentary, and assessing downstream societal impacts remains complex.

- Potential for Harm: Malfunctioning or malicious AI can cause significant harm. Examples include biased decisions in high-stakes areas and AI-enabled cyberattacks. Moreover, societal control over AI development and deployment is a contentious issue among experts.

- Economic and Societal Impact: General-purpose AI could profoundly affect labour markets and societal structures. It raises concerns about job displacement and the creation of new economic divides. The concentration of AI development in a few regions also poses systemic risks.

- Environmental Concerns: The increasing compute power required for AI development has led to higher energy consumption and associated environmental impacts.

Technical Approaches to Risk Mitigation: While several technical methods exist to reduce AI risks, none provide comprehensive guarantees. Techniques such as adversarial training, bias mitigation, and privacy protection are critical but have limitations. The report highlights the need for continuous monitoring and updates to AI systems to address newly discovered vulnerabilities.

The report calls for informed, science-based discussions to guide policy and research efforts, aiming to balance AI’s potential benefits with its inherent risks.

Read the full report here.

📊 OECD Report Highlights AI Impact on Market Competition

On 24 May 2024, the OECD released a working paper titled “Artificial Intelligence, Data and Competition,” which delves into the recent advancements in generative AI and their potential market impacts. While AI promises significant benefits, the paper stresses the importance of maintaining competitive markets to ensure these benefits are widely distributed. The analysis focuses on the three stages of the AI lifecycle: training foundation models, fine-tuning, and deployment.

Key Stages in the AI Lifecycle

- Training Foundation Models: Involves substantial data and computing resources. Recent models like GPT-4 required over $100 million for training.

- Fine-Tuning: Adjusting models for specific applications. This stage may be less resource-intensive but requires high-quality, specialized data.

- Deployment: Implementing AI solutions across various sectors, which can vary from subscription services to tailored enterprise solutions.

Competition Risks

The paper identifies several competition risks that need attention:

- Access to Data: High-quality data is crucial for developing competitive AI models. Potential scarcity of data may pose barriers.

- Computing Power: Significant computing resources, predominantly GPUs, are needed. A limited number of suppliers may affect access.

- Market Concentration: The paper notes that existing digital firms might leverage their positions to dominate AI markets, raising concerns about future competition.

Recommendations

The OECD suggests that competition authorities:

- Monitor Market Developments: Regular assessments and market studies to understand AI’s impact on competition.

- Use Advocacy and Enforcement Tools: Employ a range of tools to address potential anti-competitive practices.

- Enhance Cooperation: Both international and domestic cooperation are essential to keep up with rapid AI advancements and maintain technical expertise.

The paper also highlights the potential for AI to assist competition authorities in their roles, such as improving data analysis capabilities. Overall, while generative AI holds promise for economic growth and productivity, ensuring a competitive market environment is crucial for its equitable development and deployment.

Read more here.

🛡️ UK-Canada AI Safety Partnership Announced

On 20 May 2024, the UK and Canada announced a new collaboration to enhance AI safety, confirmed by UK Technology Minister Michelle Donelan and Canadian Minister François-Philippe Champagne. This partnership involves their AI Safety Institutes (AISIs) and aims to deepen ties, share expertise, and facilitate joint research on AI safety.

Key Aspects of the Partnership:

- Expertise Sharing and Joint Research:

  - The UK and Canada will share expertise to bolster testing and evaluation efforts.

  - They will identify other priority research areas for collaboration.

- Professional Exchanges and Resource Sharing:

  - Measures will facilitate professional exchanges and secondments.

  - Both countries will leverage and share computational resources.

- International Collaboration:

  - The UK and Canadian AISIs will collaborate with the US AISI on systemic AI safety research.

  - The partnership aims to develop an international network to advance AI safety science and standards.

You can read the press release here.

📄 EDPB ChatGPT Taskforce Report

The EDPB’s ChatGPT Taskforce, established on 13 April 2023, released an interim report on 23 May 2024, detailing its investigations into OpenAI’s ChatGPT service.

See my separate post on key takeaways from this report, here.

🚀 EDPS publishes AI Act Implementation Plan

The European Data Protection Supervisor (EDPS) has outlined a comprehensive plan to implement the EU Artificial Intelligence Act. The EDPS, taking on the role of AI supervisor for EU institutions, aims to ensure that AI tools are used effectively and safely across various sectors including healthcare, sustainability, and public administration.

Key Components of the Plan:

- Governance: AI governance will adopt a multilateral, inter-institutional approach to ensure correct usage within EU public administration. On 14 May 2024, the EDPS organized a meeting on AI Preparedness with Secretaries-General of EU institutions to present its plan and discuss collective approaches. Institutions are establishing internal mechanisms such as AI boards and specialized task forces, which the EDPS plans to enhance by creating a network of diverse “AI correspondents”.

- Risk Management: Effective risk management is essential to avoid paralysis or unmitigated risks. The EDPS emphasizes the need to identify, assess, and categorize AI-related risks. Proposals include implementing common checklists, guidelines, and instructions across EU institutions, and requiring external AI providers to ensure high compliance with the AI Act.

- Supervision: The EDPS plans to establish procedures for handling complaints, enabling individuals to assert their data protection rights, and developing mechanisms for supervising, prohibiting, and sanctioning banned AI uses like biometric categorization and emotion inference.

Read more here.

Additionally, see here the closing remarks delivered by the EDPS, Wojciech Wiewiórowski, at the Computers, Privacy and Data Protection Conference on 24 May, titled “Devising a trajectory towards a just and fair future: the identity of data protection in times of AI”. My favorite part is this:

Data protection and AI are heavily interlinked. However, I am here today with a clear message.

Data protection and privacy will not merge, nor will disperse into Artificial Intelligence.

I am here today to defend data protection and privacy against the risk to confuse them with the AI hype, as this could mean the end of this fundamental right. I am not naive, and you are not a naive community either.

Of course, Artificial Intelligence is fuelled by data, much data that some operators refuse to recognise as ‘personal’ because (they claim) this data has been aggregated or anonymised. But, Artificial intelligence and data protection are different.

The AI Act is conceived and framed as an internal market legislation for commercialising AI systems, something qualitatively and completely different from a tool to ‘protecting fundamental rights’. And, in this act, we will not find any serious answers on how to protect fundamental rights.

In addition, the enforcement of AI rules will not be accompanied by all the safeguards that come with enforcement of data protection ones. For example, the independence of the supervisory authority, which is paramount to protecting citizens’ rights.

Data protection has not turned into “everything AI”. Respect for data protection and privacy is the essential prerequisite to put people at the centre and ahead of technology. We must defend the identity of data protection in protecting humanity.